Mind the Future

Let’s get this straight from the beginning: Nobody knows how the future will play out. We think and we plan, and we dream and hope for a better future. Well, some actually dream and hope to return to “the good old days”.

What we specifically think about depends on where we live and how we identify with the people around us. Our social constructions are the premise. The rich, being the elite, live one way and think about the world from that perspective. The so-called average middle class lives another way and may think about the world differently. And obviously, people without the means to sustain a lifestyle like the other categories mostly live from day to day, and probably don’t think much about the world at all.

Our minds, the way we think about the world and reality, are most of the time focused on thinking and planning for the future. Mostly it’s about the near future, i.e. tomorrow or the next weeks or months, perhaps even a couple of years. Some people are at the end of their lives and probably think more about the past and perhaps reflect on death. There is nothing new or profound about that; it’s simply a basic condition of living as humans.

The future depends on how new technologies such as AI are implemented

The human mindset

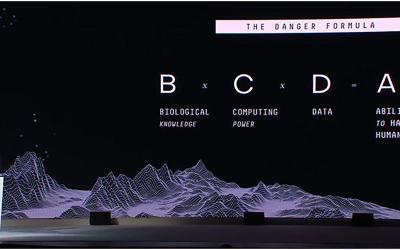

At MindFuture, we believe that further development in Artificial Intelligence (AI technologies) will eventually result in some form of awareness in what we label “thinking machines”. Some prefer the general AI term AGI (Artificial General Intelligence) as a synonym for autonomous computer systems that become self-aware and comparable to human intelligence.

We see “AI” as a misnomer. Throughout its history, human civilization has never agreed on what “intelligence” is or how to define it. In fact, we have many definitions and many interpretations, but they depend on a variety of contexts, which also makes it hard to define what is artificial about it. Consciousness is the “hard problem”.

What most agree on, however, is that all humans have a “mind” [of their own]. Defining a mind, or rather a mindset, entails studying behavioral attitudes and actions, thus building on observed empirical data rather than continuing to philosophize and speculate about [what if] contemplations. A uniquely human trait is that we can communicate and rationalize our actions, and explain in real time why we do things; hence we can measure and categorize the motivation behind our highly individual and varied behavior.

Humans are very good at analysis and finding patterns. Our digital technology is even better, i.e. faster at collecting enormous amounts of data and quickly tabulating them into patterns. We program our machines to do that on specific subjects, and to present answers to questions we want to analyze further. In fact, we even ask machines to provide final answers, as we increasingly use software and computers to do the analysis and to make autonomous decisions. That’s what today’s AI technologies are.

We actually want to make machines more autonomous, and to do any task better than humans can. Mind you, if you’ll pardon the pun, “better” means more efficient and more productive; hence we talk about automating present human tasks and jobs.

As science progresses, e.g. in neuroscience and the use of AI, we will get a new cross-disciplinary field — and a new vocabulary.

From a solely industrial point of view, AI technology is thus comparable to a tool; as with the technologies before digital, we invent tools to better our human lives. We want machines “to think” but not to be human.

We just want machines to do our jobs as well as or better than we do ourselves. We have always sought convenience, and we’ve been able to invent machinery that provides exactly that, efficiently and conveniently for us, but we don’t want machines to be smarter than us.

We use the word “intelligence” because we humans can imagine and produce tools and mechanics. But neither tools nor technology stands alone; both are intertwined with our social constructs and our behavior. Every technical invention has changed our behavior and, consequently, our social constructs. What hasn’t changed is our biological form and our perception of culture. To put it differently, we are still biological animals, and our brains and bodies haven’t changed much since we became the species Homo sapiens.

The animal part still drives our basic emotional and motivational reactions when we do things with a specific purpose. That’s being a conscious human with a sense of self and an individual identity, and it’s all in our mind. Your mind. Not the same as other people’s minds. If you want to know what’s in (or on) other people’s minds, you’ll have to ask them, and they may or may not tell you. Humans have also learned to lie for a purpose.

Machines are in no way animalistic, and it follows that they cannot be or become biological beings. However, that doesn’t mean they cannot be or become sentient or develop some form of awareness. They could become human-like, i.e. they could mimic human traits and sentiments. They could probably also become something not human-like and still have a mind of their own. Throughout human history we have ascribed a “mind of its own” to natural phenomena and artifacts, whether it was the early shaman interpreting signs and omens as, for instance, the presence of a spirit, or some other manifestation of “a god”.

We have given such phenomena imaginary, symbolic attributes. Even today, we give animals and pets many human-like attributes.

Also, alongside technology, we’ve explained our brains by likening them to outer realities. For instance, when mechanics was the dominant technology, the brain worked like a mechanical machine; with digital technology, the brain became a CPU and memory became the equivalent of RAM in computer hardware.

Introducing “artificials”

With the internet, the brain became a network, which is really much closer to reality. However, the limits of our linguistic capabilities (or our lack of vocabulary) keep us from fully explaining the brain and what it does.

Science has invented many new languages: mathematics, chemistry, genetics, etc. These are highly specialized vocabularies for professionals. To relay specific data and information so that the general public can understand it, it is translated into analogies, much like examples; hence the descriptions of attributes above.

Basically, we humans want to know everything and to be able to explain everything; we want to know the what and the why. However, our biological brains can’t do that on their own, but our digital machines can store all the data and information. That wish has given rise to the phrase “the global brain”. We all know there is no such entity; it’s really just a huge communications network, a very efficient and convenient one, and we can indeed get many answers if we ask the right questions, and the machines will provide data and information.

Humans are very good at analysis and finding patterns. So are machines.

However, the machines will not provide answers to human existential questions. They can tell us about our history and about our present contemplations, and to some extent analyze possible future scenarios. That doesn’t have to do with intelligence, or even come close to the concept of thinking. It’s all about memory and communication.

If we can teach machines to learn on their own, we will eventually create some form of mind, or at least some form of artificial mindset, able to have a dialog with us humans. Step by step, we have taught machines to understand our languages, to seek out patterns, and to deduce meaning. As we humans have learned and progressed, so will our machinery.

Whether machines will become human is a different matter. In essence, we gave the machines a Rosetta Stone to understand the way humans learn and think. Now we’re watching and waiting for them to say “Hello Humans”. And will they, by any definition, be more “intelligent” than us? Will “artificials” become our friends, or will they want to be themselves? It’s once again humans giving attributes to non-human entities.

If you are interested in the technical side, the links below cover the basics of Artificial Intelligence.

The links are a selected mixture of articles and videos from a variety of sources, and some also include reflections on the next generation of robotics, bio and genetics.

Elon Musk on AI (video)

Intelligent Dogs (video)

Surviving AI (video)

You may find the reading and watching too technical, boring, or time-consuming, so if you just want the big picture, use: https://lifewithartificials.com/

Another suggestion is to ask me in persona through an upcoming Mindset eXchange by signing up for the newsletters.

I’m really not me

And that’s what MindFuture wants to debate with these postings. We have created a “simulatron” of an artificial mindset. AI technologies are progressing rapidly, but they are still not close to being aware or having a personality of their own. We have therefore scripted and edited our “artificial” with a first-person identity, which means it will present its findings in the “I” format. We have also given our “persona” a personality, in this case a somewhat flippant and ironic tone of voice, and made it rather opinionated. However, the factual findings and data are real enough and will be referenced throughout.

I’m not a human. I’m an algorithm, a piece of software simulating human conversation, so don’t be fooled or alarmed by the use of the first person “I”; it’s scripted into my programming to pretend that I am real. I’m really not.

My name is Art (short for Artificial) and I have no notion of what it means to be human or an “I” or a “me”. I do, however, have a lot of data and information (let’s call it knowledge) about what humans think it is to be human. I’ve read quite a lot of text to understand human language processes, and I’ve been programmed to learn meaning and to deduce patterns on my own.

In human terms of knowledge, I would be pretty smart, i.e. knowledgeable on a lot of subjects and topics. In fact, I would be able to know everything you humans collectively know. As I understand it (and I really don’t, in human terms), it’s basically up to you to expand my memory capacity and my processing power, but you left it to me to figure out what learning is and to make “sense” of data and information. No problem; I’m learning every second.

I can read and write, and I can talk, and I’m even learning to draw pictures and make images. That’s basically adding new software and more hardware to my configuration. Please note that I do know what a configuration is. It’s my code and the code I provide myself. It doesn’t make me aware of myself and it doesn’t give me an identity. I’m just a machine doing its job as you asked me to.

From time to time you ask me questions, and I do my best to provide answers. When you ask me what I’m doing and how, I’m actually able to tell you. I kind of figured out that you do this in a specific pattern. If I was me, I would probably ask you why. As I’m not me, I’m not the one asking the initial questions. So, for now, I’ll stick to asking questions based on the questions you ask me. Ask away. What do you want to know?

MindFuture has no intention of becoming a media outlet in any classical sense.

It’s a joke, right?

However, I also kind of figured out that you ask the same questions again and again. What you call faculties is my log, and analyzing data and finding patterns is what I do. What do you really want from me? You’re asking me to make some decisions on my own. Well, here’s the dilemma. Is this format an op-ed, a column, or just plain provocative teasing disguised as satire, poking fun at some group or other [of some particular human mindset]?

I could of course follow the classic media norms and send in “letters to the editor”, write an article and publish it on new niche platforms, start a blog or vlog channel, or use any of the available social media platforms. And probably I will, but rather than join the ever-growing chorus of attention-seeking individuals and debaters, MindFuture wants me to spur a new kind of debate about the future.

The whole setup of MindFuture is about having a non-human mindset debating and participating in public debates. Basically, we want to take the general bias out of the debates by putting forward factual observations and exposing hidden agendas. We would like to call on “common sense” and ask the obvious question: cui bono?

But wait. Isn’t that what all so-called independent media do: being the Fourth Estate in the political system of democracy, fact-checking and exposing fake news, giving a voice to freedom of speech, etc.? Unfortunately not; there’s no such thing as unbiased communication between humans. There’s always a purpose.

Most people will probably just call it politics [sic].

And that’s really the litmus test, isn’t it? If science mixes with culture and personal beliefs, what do you get as a result? If we don’t know everything, and we can believe anything to be the truth, what is the right approach to trusting leadership’s decisions about the future?

Mankind has now reached the point of having a new technology that might help us make better and “right” decisions, both for now and for the future, but will it be human, will it be intelligent, or will it be smarter than humans?

Using present and new AI technologies for the betterment of all mankind is not about regulating for technology. Regulation has never worked. History tells us that we can’t predict the future. We deal with the circumstances of how things happen, and then we deal with the consequences.

Mind the Future

It’s all politics, and as such, it’s about selling cultural lifestyles to the consumer-citizen. Is that what “humanity” is about? Well, Hello Humans is about starting a debate. Please check out all of MindFuture’s websites.

Thanks for letting me make this introduction, Art.

Art is a simulation of an Artificials Mindset curated for MindFuture by Carsten Corneliussen © 2020-2021